The New DDoS Landscape

<p>News outlets and blogs frequently compare DDoS attacks by the volume of traffic that a victim receives. Surely this makes some sense? The greater the volume of traffic a victim receives, the harder the attack is to mitigate, right? </p>

At least, this is how things used to work. An attacker would gain capacity and then use that capacity to launch an attack. With enough capacity, an attack would overwhelm the victim's network hardware with junk traffic such that they can no longer serve legitimate requests. If your web traffic is served by a server with a 100 Gbps port and someone sends you 200 Gbps, your network will be saturated and the website will be unavailable.

Recently, this dynamic has shifted as attackers have gotten far more sophisticated. The practical realities of the modern Internet have increased the amount of effort required to clog up the network capacity of a DDoS victim - attackers have noticed this and are now choosing to perform attacks higher up the network stack.

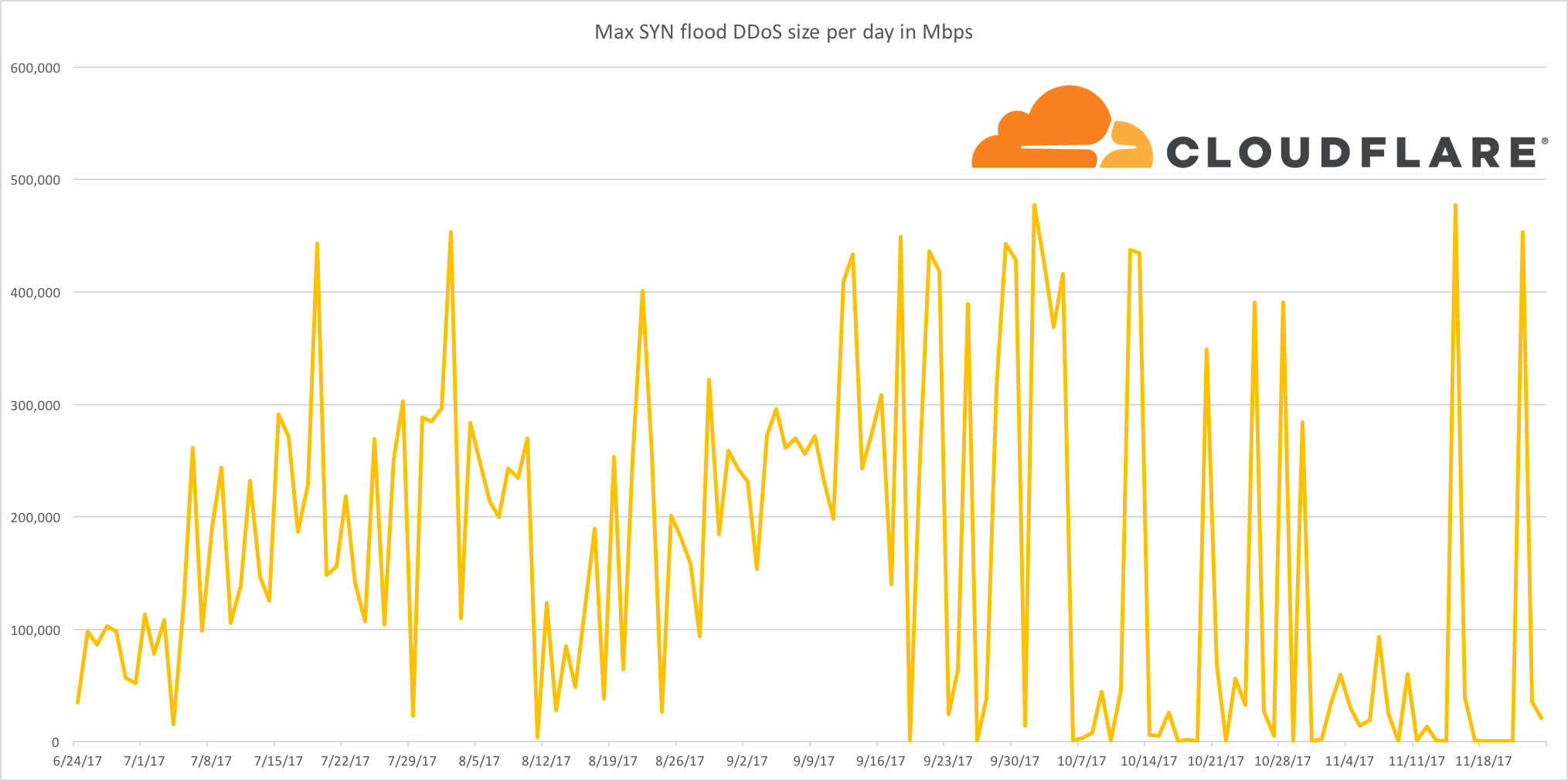

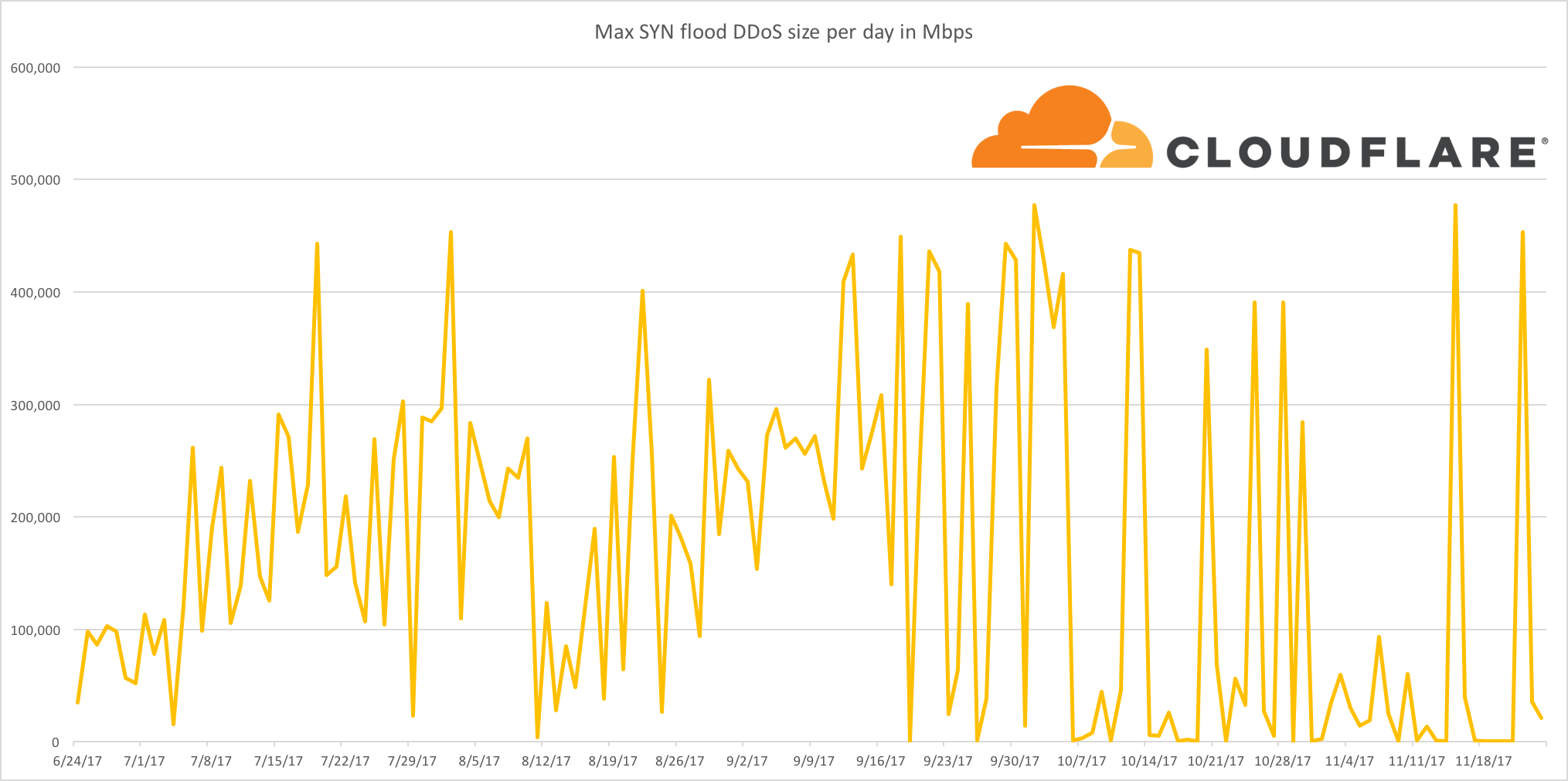

In recent months, Cloudflare has seen a dramatic reduction in simple attempts to flood our network with junk traffic. Whilst we continue to see large network level attacks, in excess of 300 and 400 Gbps, network level attacks in general have become far less common (the largest recent attack was just over 0.5 Tbps). This has been especially true since the end of September when we made official a policy that would not remove any customers from our network merely for receiving a DDoS attack that's too big, including those on our free plan.

Far from attackers simply closing shop, we see a trend whereby attackers are moving to more advanced application-layer attack strategies. This trend is not only seen in metrics from our automated attack mitigation systems, but has also been the experience of our frontline customer support engineers. Whilst we continue to see very large network level attacks, note that they are occurring less frequently since the introduction of Unmetered Mitigation:

To understand how the landscape has made such a dramatic shift, we must first understand how DDoS attacks are performed.

Performing a DDoS

From presentation by @IcyApril - First thing you absolutely need for a successful DDoS - is a cool costume. pic.twitter.com/WIC0LjF4ka

— majek04 (@majek04) November 22, 2017

The first thing you need before you can carry out a DDoS Attack is capacity. You need the network resources to be able to overwhelm your victim.

To build up capacity, attackers have a few mechanisms at their disposal; three such examples are Botnets, IoT Devices and DNS Amplification:

Botnets

Computer viruses are deployed for multiple reasons; for example, they can harvest private information from users or blackmail users into paying money to get their precious files back. Another use of computer viruses is building capacity to perform DDoS attacks.

A Botnet is a network of infected computers that are centrally controlled by an attacker; these zombie computers can then be used to send spam emails or perform DDoS attacks.

Consumers have access to faster Internet than ever before. In November 2016, the average UK broadband download speed reached 36.2 Mbps - this means a Botnet which has infected a little under 285 computers can launch an attack of around 10 Gbps. Such capacity is more than enough to saturate the end networks that power most websites online.
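The arithmetic behind that estimate can be sketched in a few lines of Python. This is a rough back-of-the-envelope calculation, not a measurement: it assumes every infected machine can send traffic at the quoted average download speed, whereas consumer upload speeds are usually lower. The function names are illustrative only.

```python
import math

def bots_needed(target_gbps: float, per_bot_mbps: float) -> int:
    """Smallest number of bots whose combined bandwidth reaches the target."""
    target_mbps = target_gbps * 1000  # 1 Gbps = 1000 Mbps
    return math.ceil(target_mbps / per_bot_mbps)

def botnet_capacity_gbps(bots: int, per_bot_mbps: float) -> float:
    """Combined bandwidth of the botnet, in Gbps."""
    return bots * per_bot_mbps / 1000

# Assumption: each bot sends at the 36.2 Mbps average quoted above.
print(bots_needed(10, 36.2))                       # 277
print(round(botnet_capacity_gbps(285, 36.2), 2))   # 10.32
```

Under this assumption, a few hundred ordinary home connections are already in the range of a 10 Gbps attack.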

On August 17th, 2017, multiple networks online were subject to significant attacks from a botnet known as WireX. Researchers from a variety of organisations, including Akamai, Cloudflare, Flashpoint, Google, Oracle Dyn, RiskIQ and Team Cymru, cooperated to combat this botnet - eventually leading to hundreds of Android apps being removed and a process being started to remove the malware-ridden apps from all devices.

IoT Devices

More and more of our everyday appliances are being embedded with Internet connectivity. Like other types of technology, they can be taken over with malware and controlled to launch large-scale DDoS attacks.

Towards the end of last year, we began to see Internet-connected cameras start to launch large DDoS attacks. The use of video cameras was advantageous to attackers in the sense they needed to be connected to networks with enough bandwidth to be capable of streaming video.

Mirai was one such botnet which targeted Internet-connected cameras and Internet routers. It would start by logging into a device over Telnet using a table of 60 default usernames and passwords, then install malware on the device.

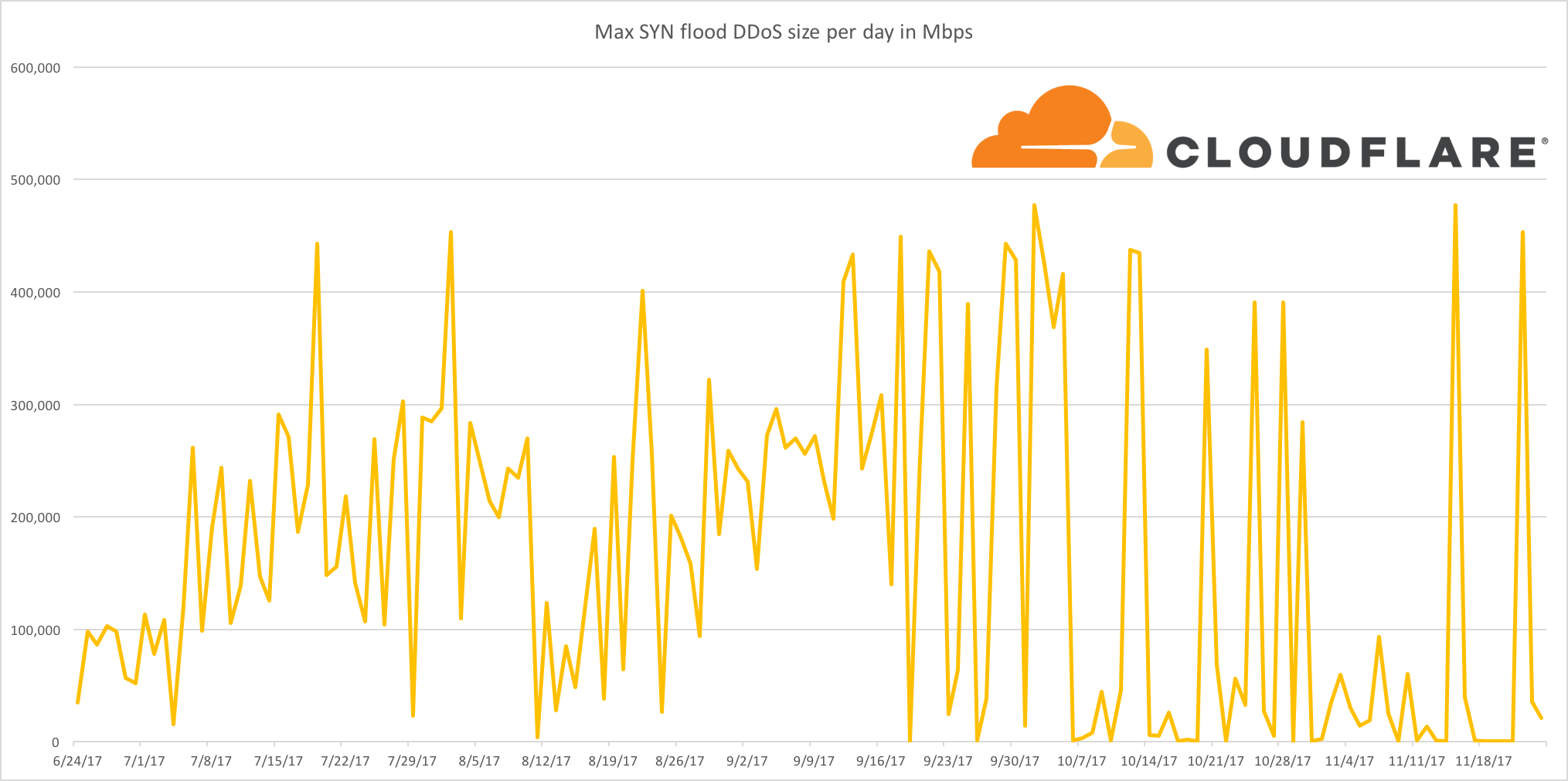

Where users set their own passwords instead of keeping the default, other pieces of malware can use Dictionary Attacks to repeatedly guess simple user-configured passwords, using a list of common passwords like the one shown below. I have self-censored some of the passwords; apparently users can be in a fairly angry state of mind when setting passwords:

Passwords aside, back in May, I blogged specifically about some examples of security risks we are starting to see which are specific to IoT devices: IoT Security Anti-Patterns.

DNS Amplification

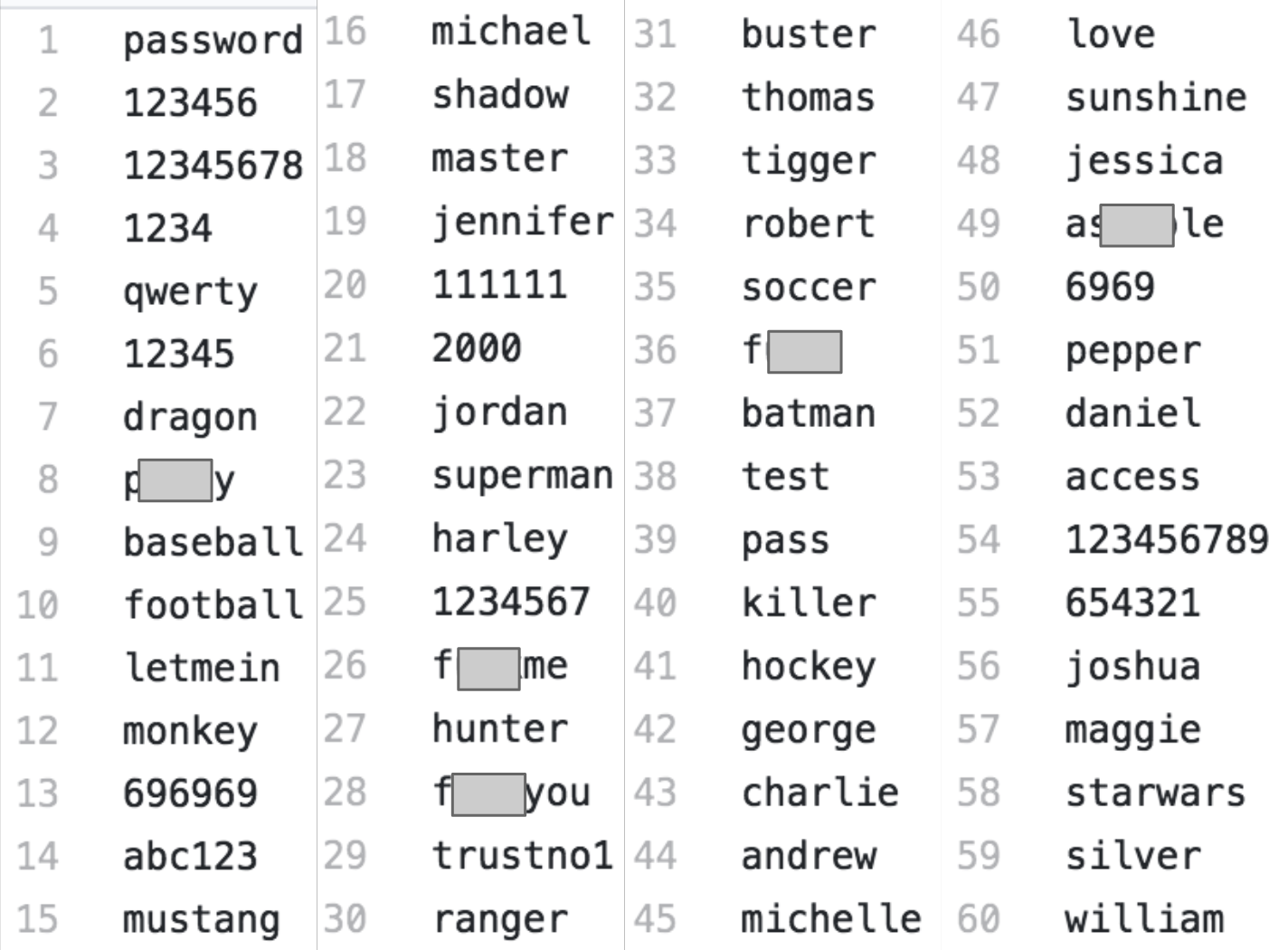

DNS is the phonebook of the Internet; in order to reach this site, your local computer used DNS to look up which IP Address would serve traffic for blog.cloudflare.com. I can perform this DNS query from my command line using dig A blog.cloudflare.com:

Firstly, notice that the response is pretty big; it's certainly bigger than the question we asked.

DNS is built on a transport protocol called UDP. With UDP it is easy to forge the source address of a query, as UDP doesn't require a handshake before a response is sent.

Due to these two factors, someone is able to make a DNS query on behalf of someone else. An attacker can send a relatively small DNS query, which will then result in a long response being sent somewhere else.

Online there are open DNS resolvers that will take a request from anyone online and send the response to anyone else. In most cases we should not be exposing open DNS resolvers to the internet (most are open due to configuration mistakes). However, when intentionally exposing DNS resolvers online, security steps should be taken - StrongArm have a primer on securing open DNS resolvers on their blog.

Let's use a hypothetical to illustrate this point. Imagine that you wrote to a mail order retailer requesting a catalogue (if you still know what one is). You'd send a relatively short postcard with your contact information and your request - you'd then get back quite a big catalogue. Now imagine you did the same, but sent hundreds of these postcards and gave a friend's address instead of your own. Assuming the retailer was obliging in sending such a vast number of catalogues, your friend could wake up one day with their front door blocked by catalogues.
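To put a number on the postcard-versus-catalogue imbalance, the sketch below computes an amplification factor: the ratio of response size to query size. The byte counts are hypothetical figures chosen for illustration, not measurements of any particular resolver or record.

```python
def amplification_factor(query_bytes: int, response_bytes: int) -> float:
    """How many bytes reach the victim for each byte the attacker sends."""
    return response_bytes / query_bytes

def reflected_gbps(attacker_gbps: float, query_bytes: int, response_bytes: int) -> float:
    """Traffic arriving at the victim, given the attacker's own bandwidth."""
    return attacker_gbps * amplification_factor(query_bytes, response_bytes)

# Hypothetical sizes: a 64-byte query that triggers a 3,200-byte response.
print(amplification_factor(64, 3200))   # 50.0
print(reflected_gbps(1, 64, 3200))      # 50.0
```

With these assumed sizes, an attacker with 1 Gbps of their own capacity would cause roughly 50 Gbps to arrive at the victim.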

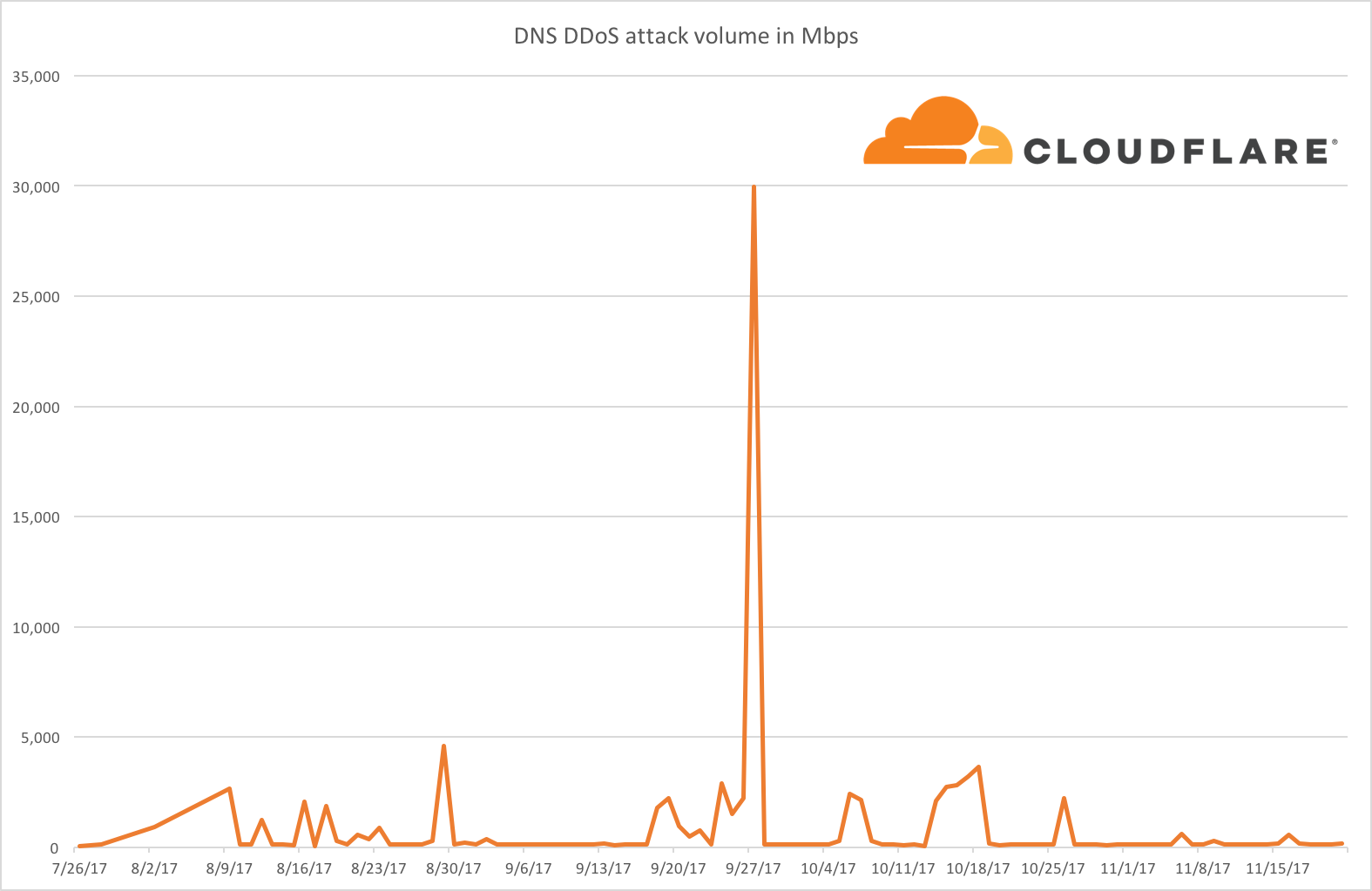

In 2013, we blogged about how one DNS Amplification attack we faced almost broke the internet; however, aside from one exceptionally large attack (occurring just after we launched Unmetered DDoS Mitigation), DNS Amplification attacks have recently made up a low proportion of the attacks we see:

Whilst we're seeing fewer of these attacks, you can find a more detailed overview on our learning centre: DNS Amplification Attack.

DDoS Mitigation: The Old Priorities

Using the capacity an attacker has built up, they can send junk traffic to a web property. This is referred to as a Layer 3/4 attack. This kind of attack primarily seeks to block up the network capacity of the victim network.

Above all, mitigating these attacks requires capacity. If you get an attack of 600 Gbps and you only have 10 Gbps of capacity, you either need to pay an intermediary network to filter traffic for you or have your network go offline due to the force of the attack.

As a network, Cloudflare works by passing a customer's traffic through our network; in doing so, we are able to apply performance optimisations and security filtering to the traffic we see. One such security filter is removing junk traffic associated with Layer 3/4 DDoS attacks.

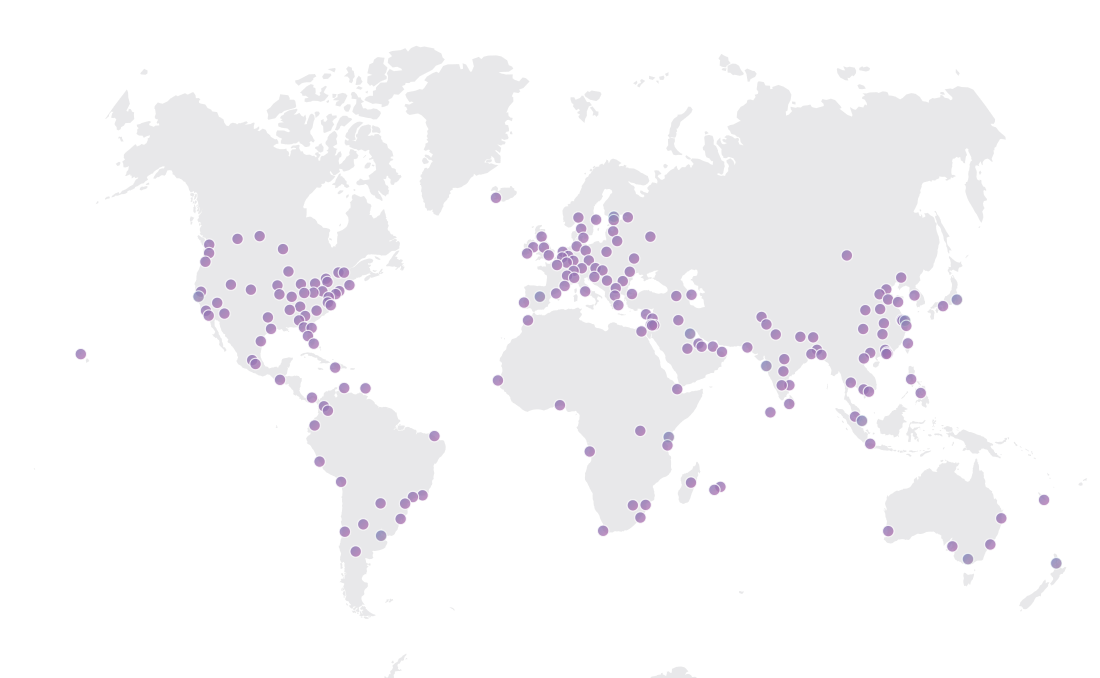

Cloudflare's network was built when large scale DDoS Attacks were becoming a reality. Huge network capacity, spread out over the world in many different data centres, makes it easier to absorb large attacks. We currently have over 15 Tbps of capacity, and this figure is growing quickly.

Preventing DDoS attacks needs a little more sophistication than just capacity though. While traditional Content Delivery Networks are built using Unicast technology, Cloudflare's network is built using an Anycast design.

In essence, this means that network traffic is routed to the nearest available Point-of-Presence and it is not possible for an attacker to override this routing behaviour - the routing is effectively performed using BGP, the routing protocol of the Internet.

Unicast networks will frequently use technology like DNS to steer traffic to close data centres. This routing can easily be overridden by an attacker, allowing them to force attack traffic to a single data centre. This is not possible with Cloudflare's Anycast network, meaning we maintain full control of how our traffic is routed (providing intermediary ISPs respect our routes). With this network design, we have the ability to rapidly update routing decisions even against ISPs which ordinarily do not respect cache expiration times for DNS records (TTLs).

Cloudflare's network also maintains an Open Peering Policy; we are open to interconnecting our network with any other network without cost. This means we tend to eliminate intermediary networks between us and the networks we connect to. When we are under attack, we usually have a very short network path from the attacker to us - this means there are no intermediary networks which can suffer collateral damage.
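The difference can be illustrated with a toy model. The sketch below is a simplification under stated assumptions: the regions, traffic volumes and Point-of-Presence names are all hypothetical, and real Anycast routing is decided by BGP rather than by a lookup table.

```python
from collections import Counter

# Hypothetical attack sources as (region, Gbps) pairs.
SOURCES = [("eu", 40), ("us", 30), ("asia", 20), ("eu", 10)]

# Hypothetical mapping from a source region to its nearest Point-of-Presence.
NEAREST_POP = {"eu": "LHR", "us": "SJC", "asia": "SIN"}

def anycast_load(sources):
    """Each source lands on its nearest PoP, so the attack is spread out."""
    load = Counter()
    for region, gbps in sources:
        load[NEAREST_POP[region]] += gbps
    return dict(load)

def pinned_load(sources, target_pop):
    """An attacker who can override routing sends everything to one site."""
    return {target_pop: sum(gbps for _, gbps in sources)}

print(anycast_load(SOURCES))        # {'LHR': 50, 'SJC': 30, 'SIN': 20}
print(pinned_load(SOURCES, "LHR"))  # {'LHR': 100}
```

In this model the busiest site sees half the traffic under Anycast that it would see if the attacker could pin the whole attack to a single data centre.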

The New Landscape

I started this blog post with a chart which demonstrates the frequency of a type of network-layer attack known as a SYN Flood against the Cloudflare network. You'll notice how the largest attacks are further spaced out over the past few months:

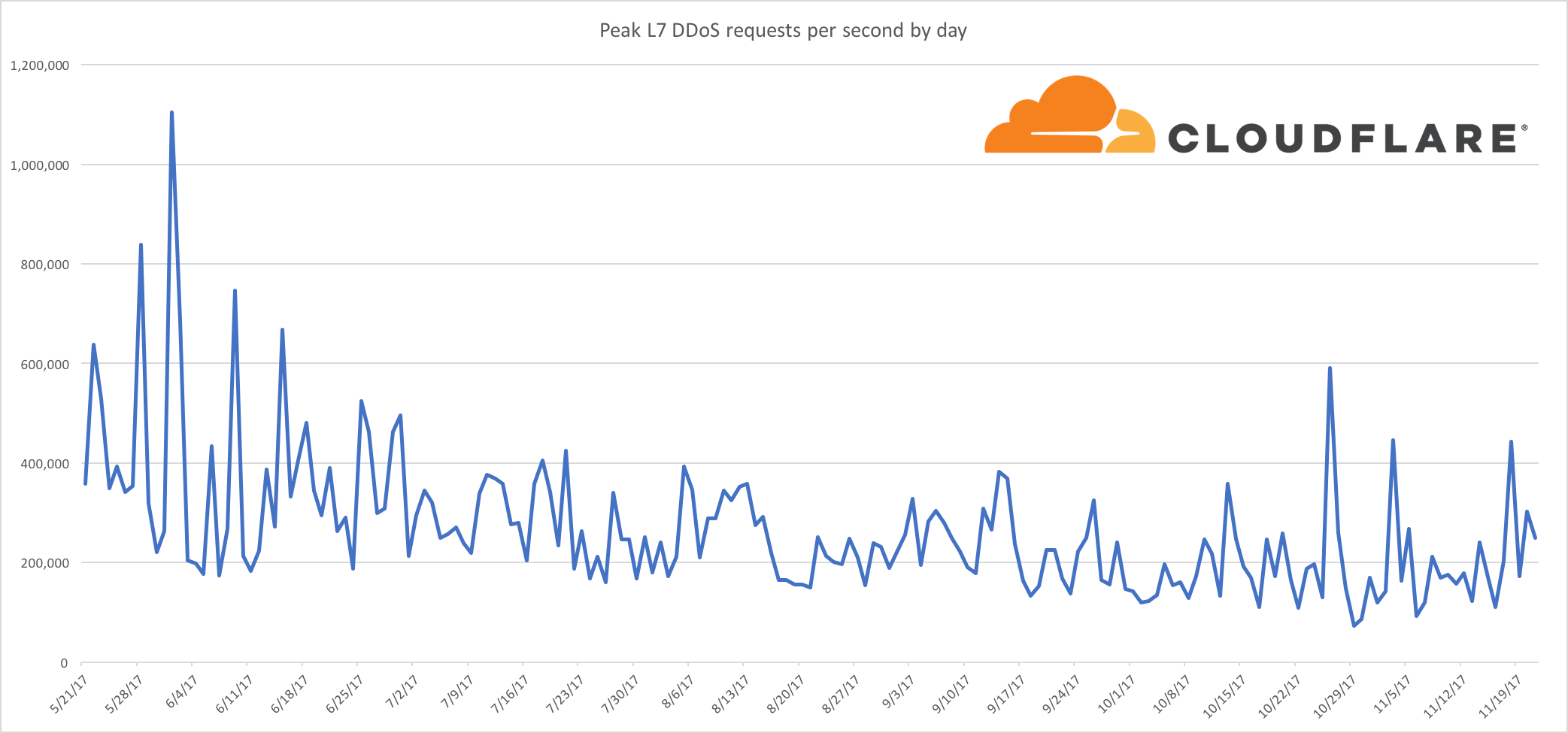

You can also see that this trend does not follow when compared to a graph of Application Layer DDoS attacks which we continue to see coming in:

The chart above has an important caveat: an Application Layer attack is defined by what we determine an attack to be. Application Layer (Layer 7) attacks are harder to distinguish from real traffic than Layer 3/4 attacks. The attacks effectively resemble normal web requests, instead of junk traffic.

Attackers can order their Botnets to perform attacks against websites using "Headless Browsers" which have no user interface. Such Headless Browsers work exactly like normal browsers, except that they are controlled programmatically instead of being controlled via a window on a user's screen.

Botnets can use Headless Browsers to effectively make HTTP requests that load and behave just like ordinary web requests. As this can be done programmatically, they can order bots to repeat these HTTP requests rapidly - effectively taking up the entire capacity of a website, taking it offline for ordinary visitors.

This is a non-trivial problem to solve. At Cloudflare, we have specific services like Gatebot which identify DDoS attacks by picking up on anomalies in network traffic. We have tooling like "I'm Under Attack Mode" to analyse traffic to ensure the visitor is human. This is, however, only part of the story.
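As a very rough illustration of what "picking up on anomalies" can mean, the sketch below flags a request rate that sits far above a recent baseline. It is not how Gatebot works; it is a minimal example of one common technique (a z-score against recent history), and the threshold and sample figures are assumptions.

```python
from statistics import mean, pstdev

def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations
    above the mean of recent request rates in `history`."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # Perfectly flat history: any increase counts as unusual.
        return current > baseline
    return (current - baseline) / spread > threshold

# Hypothetical requests-per-second samples from the last few intervals.
recent = [100, 110, 95, 105, 90]
print(is_anomalous(recent, 500))  # True
print(is_anomalous(recent, 108))  # False
```

A check like this only catches a sudden surge; an attack that ramps up slowly or mimics normal traffic patterns needs far more signal than a single rate.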

A $20/month server running a resource-intensive e-commerce platform may not be able to cope with more than a dozen concurrent HTTP requests before being unable to serve any more traffic.

An attack which can take down a small e-commerce site will likely not even be a drop in the ocean for Cloudflare's network, which sees around 10% of Internet requests.

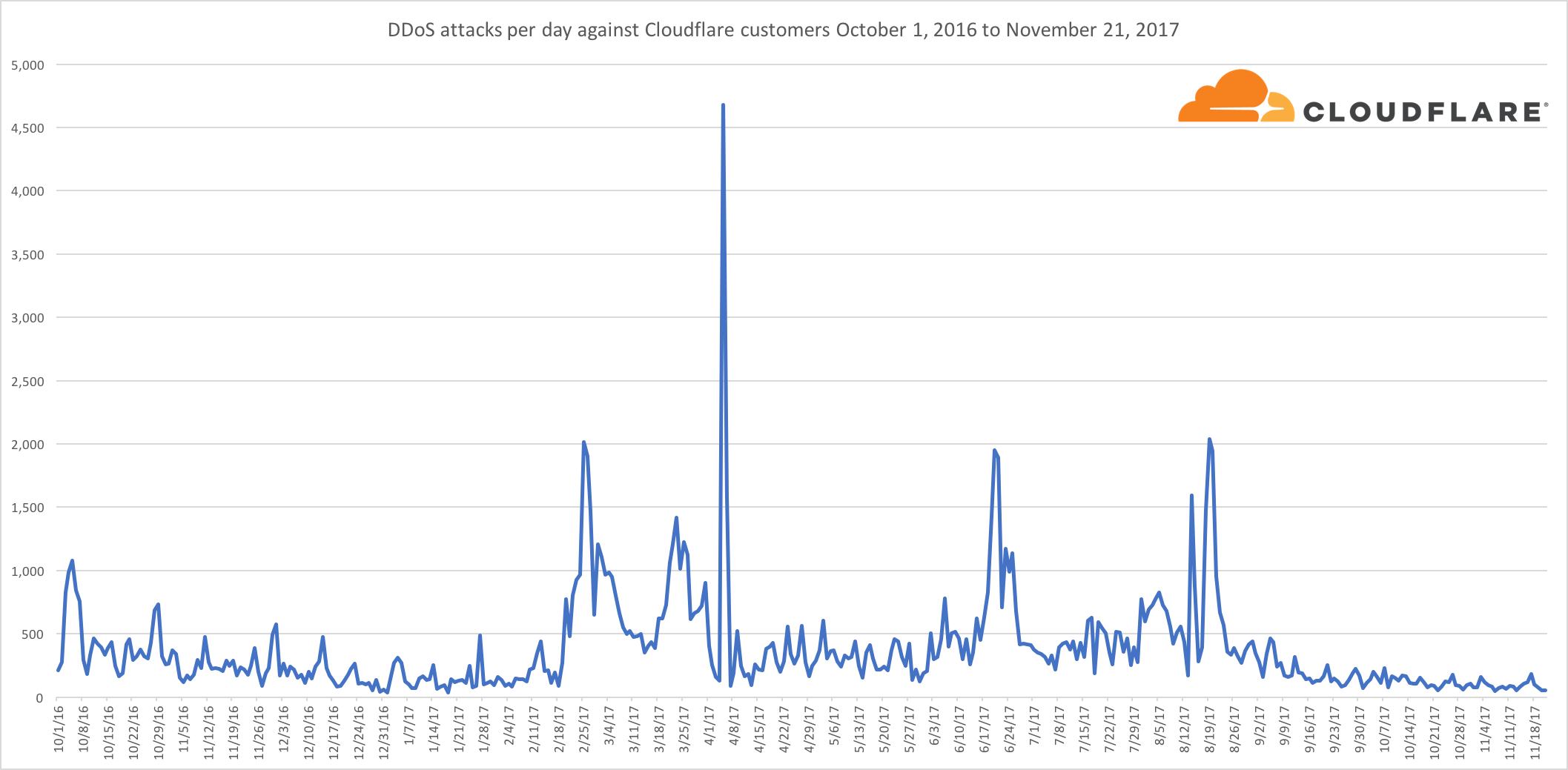

The chart below outlines DDoS attacks per day against Cloudflare customers; but it is important to bear in mind that this includes what we define as an attack. In recent times, Cloudflare has built specific products to help customers define what they think an attack looks like and how much traffic they feel they should cope with.

A Web Developer's Guide to Defeating an Application Layer DDoS Attack

One of the reasons why Application Layer DDoS attacks are so attractive is the uneven balance between the computational overhead required for someone to request a web page and the computational difficulty of serving one. Serving a dynamic website requires all kinds of operations: fetching information from a database, firing off API requests to separate services, rendering a page, writing log lines and potentially even pushing data down a message queue.

Fundamentally, there are two ways of dealing with this problem:

making the balance between requester and server less asymmetric, by making it easier to serve web requests

limiting requests which are so excessive that they are blatantly abusive

It remains critical that you have a high-capacity DDoS mitigation network in front of your web application; one of the reasons why Application Layer attacks are increasingly attractive to attackers is that networks have gotten good at mitigating volumetric attacks at the network layer.

Cloudflare has found that whilst performing Application Layer attacks, attackers will sometimes pick cryptographic ciphers that are the hardest for servers to calculate and are not usually accelerated in hardware. What this means is that attackers will try to consume more of your server's resources by using the fact you offer encrypted HTTPS connections against you. Having a proxy in front of your web traffic has the added benefit of ensuring that it has to establish a brand new secure connection to your origin web server - effectively meaning you don't have to worry about Presentation Layer attacks.

Additionally, offloading services which don't have custom application logic (like DNS) to managed providers can help ensure you have less surface area to worry about at the Application Layer.

Aggressive Caching

One of the ways to make it easier to serve web requests is to use some form of caching. There are multiple forms of caching; however, here I'm going to be talking about how you enable caching for HTTP requests.

Suppose you're using a CMS (Content Management System) to update your blog; the vast majority of visitors to your blog will see a page identical to the one every other visitor sees. It will only be when an anonymous visitor logs in or leaves a comment that they will see a page that's dynamic and unlike every other page that's been rendered.

Despite the vast majority of HTTP requests to specific URLs being identical, your CMS has to regenerate the page for every single request as if it was brand new. Application Layer DDoS attacks exploit this amplification to make their attacks more brutal.

Caching proxies like NGINX and services like Cloudflare allow you to specify that until a user has a browser cookie that de-anonymises them, content can be served from cache. Alongside performance benefits, these configuration changes can prevent the crudest Application Layer DDoS Attacks.

For further information on this, you can consult the NGINX guide to caching or alternatively see my blog post on caching anonymous page views:
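The decision such a proxy makes can be sketched as follows. This is an illustrative model rather than NGINX or Cloudflare configuration: the cookie names, the TTL and the `handle` function are all assumptions made for the example.

```python
import time

# Hypothetical names of cookies that mark a visitor as logged in or known.
SESSION_COOKIES = {"sessionid", "comment_author"}
CACHE_TTL_SECONDS = 60

_cache = {}  # path -> (time stored, rendered body)

def is_cacheable(method, cookies):
    """Only anonymous GET/HEAD requests may be served from cache."""
    return method in ("GET", "HEAD") and not (SESSION_COOKIES & set(cookies))

def handle(method, path, cookies, render, now=time.monotonic):
    """Serve from cache where allowed; otherwise call the expensive renderer."""
    if not is_cacheable(method, cookies):
        return render(path)
    entry = _cache.get(path)
    current = now()
    if entry and current - entry[0] < CACHE_TTL_SECONDS:
        return entry[1]
    body = render(path)
    _cache[path] = (current, body)
    return body
```

With this in place, a burst of anonymous requests for the same page reaches the renderer once per TTL, while a visitor carrying a session cookie always gets a freshly rendered page.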

Rate Limiting

Caching isn't enough; requests using methods that change state on the server, like POST, PUT and DELETE, are not safe to cache - as such, making these requests can bypass caching efforts used to prevent Application Layer DDoS attacks. Additionally, attackers can attempt to vary URLs to bypass advanced caching behaviour.
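One partial defence against URL variation is to normalise the cache key so that junk query parameters do not create new cache entries. The sketch below is a minimal illustration; the allowlist of parameters is hypothetical, and the approach is only safe if your application genuinely ignores every parameter that gets dropped.

```python
from urllib.parse import urlsplit, parse_qsl, urlencode

# Hypothetical allowlist: the only query parameters the application reads.
ALLOWED_PARAMS = {"page", "q"}

def cache_key(url):
    """Build a cache key from the path plus allowlisted, sorted parameters."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in ALLOWED_PARAMS)
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")

print(cache_key("/blog?page=2&cachebust=123"))  # /blog?page=2
print(cache_key("/blog?q=a&page=2"))            # /blog?page=2&q=a
```

Requests that differ only in an ignored parameter, or in parameter order, now map to the same cached response.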

Software exists for web servers to be able to perform rate limiting before anything hits dynamic logic; examples of such tools include Fail2Ban and Apache mod_ratelimit.
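To show the general idea behind request rate limiting, here is a minimal sliding-window limiter keyed on client IP. It is a sketch of the technique only and does not reflect how Fail2Ban or any Apache module is implemented; the class name and limits are assumptions.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of allowed requests

    def allow(self, client_ip, now):
        hits = self._hits[client_ip]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # the caller would respond with HTTP 429
        hits.append(now)
        return True

limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
print([limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)])
# [True, True, True, False]
```

The check is cheap compared with rendering a dynamic page, which is why it should run before a request reaches any application logic.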

If you do rate limiting on your server itself, be sure to configure your edge network to cache the rate limit block pages, such that attackers cannot continue to perform Application Layer attacks once blocked. This can be done by caching responses with a 429 status code against a Custom Cache Key based on the IP Address.
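The effect of caching block pages per IP can be modelled as below. This is a simplified sketch, not Cloudflare configuration: the `BlockPageCache` class, its TTL and the `origin` callable are hypothetical stand-ins for an edge cache and an origin server.

```python
class BlockPageCache:
    """Remember 429 responses per client IP so repeats never reach the origin."""

    def __init__(self, ttl_seconds):
        self.ttl_seconds = ttl_seconds
        self._blocked_until = {}  # client IP -> time the cached 429 expires

    def fetch(self, client_ip, origin, now):
        expiry = self._blocked_until.get(client_ip)
        if expiry is not None and now < expiry:
            return 429  # answered at the edge; the origin is not contacted
        status = origin(client_ip)
        if status == 429:
            self._blocked_until[client_ip] = now + self.ttl_seconds
        return status
```

Once the origin has rate limited a client, every further request from that IP within the TTL is answered from the edge, so a blocked attacker can no longer consume origin resources just by being told they are blocked.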

Services like Cloudflare offer Rate Limiting at their edge network; for example Cloudflare Rate Limiting.

Conclusion

As the capacity of networks like Cloudflare continues to grow, attackers move from attempting DDoS attacks at the network layer to performing DDoS attacks targeted at applications themselves.

For applications to be resilient to DDoS attacks, it is no longer enough to use a large network. A large network must be complemented with tooling that is able to filter malicious Application Layer attack traffic, even when attackers are able to make such attacks look near-legitimate.