Fixing the mixed content problem with Automatic HTTPS Rewrites

CloudFlare aims to put an end to the unencrypted Internet. But the web has a chicken and egg problem moving to HTTPS.

Long ago it was difficult, expensive, and slow to set up an HTTPS capable web site. Then along came services like CloudFlare’s Universal SSL that made switching from http:// to https:// as easy as clicking a button. With one click a site was served over HTTPS with a freshly minted, free SSL certificate.

Boom.

Suddenly, the website is available over HTTPS, and, even better, the website gets faster because it can take advantage of the latest web protocol, HTTP/2.

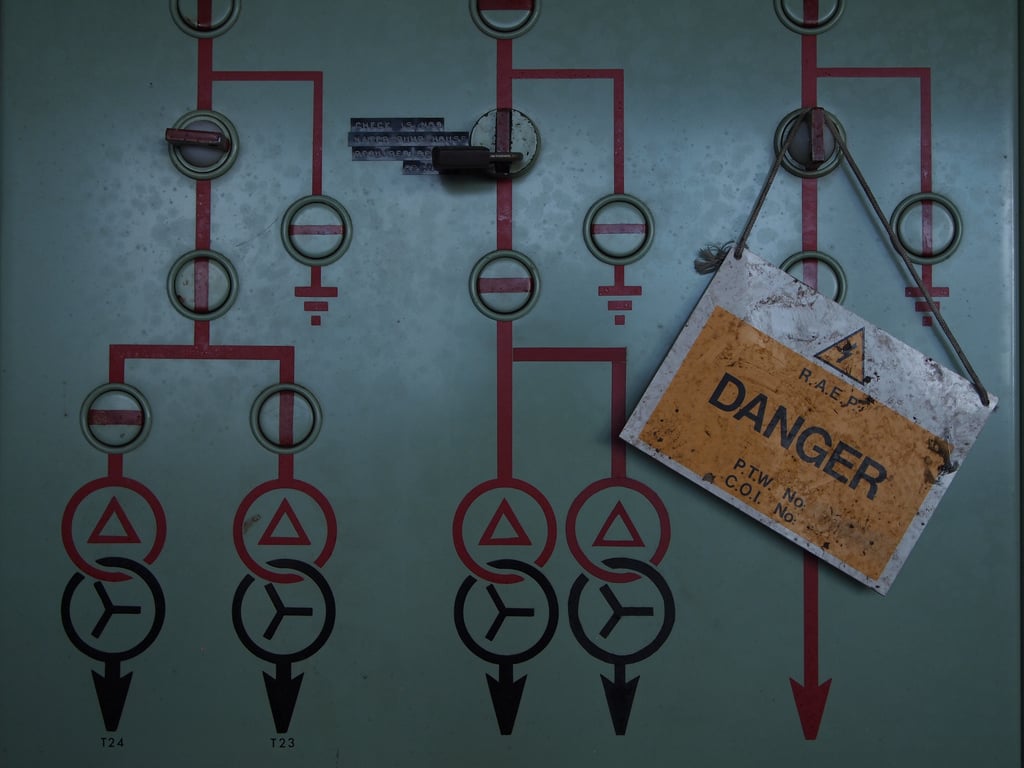

Unfortunately, the story doesn’t end there. Many otherwise secure sites suffer from the problem of mixed content. And mixed content means the green padlock icon will not be displayed for an https:// site because, in fact, it’s not truly secure.

Here’s the problem: if an https:// website includes any content from a site (even its own) served over http:// the green padlock can’t be displayed. That’s because resources like images, JavaScript, audio, video etc. included over http:// open up a security hole into the secure web site. A backdoor to trouble.

Web browsers have known this was a problem for a long, long time. Way back in 1997, Internet Explorer 3.0.2 warned users of sites with mixed content with the following dialog box.

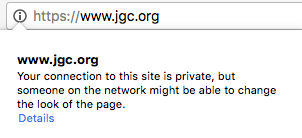

Today, Google Chrome shows a circled i on any https:// page that has insecure content.

And Firefox shows a padlock with a warning symbol. To get a green padlock from either of these browsers requires every single subresource (resource loaded by a page) to be served over HTTPS.

If you had clicked Yes back in 1997, Internet Explorer would have ignored the dangers of mixed content and gone ahead and loaded subresources using plaintext HTTP. Clicking No prevented them from being loaded (often resulting in a broken but secure web page).

Transitioning to fully secure content

It’s tempting, but naive, to think that the solution to mixed content is easy: “Simply load everything using https:// and just fix your website”. Unfortunately, the smörgåsbord of content loaded into modern websites from first-party and third-party web sites makes it very hard to ‘simply’ make that change.

CC BY 2.0 image by Mike Mozart

Wired documented their transition to https:// in a series of blog posts that shows just how hard it can be to switch everything to HTTPS. They started in April and spent 5 months on the process (after having already prepped for months just to get to https:// on their main web site). In May they wrote about a snag:

"[…] one of the biggest challenges of moving to HTTPS is preparing all of our content to be delivered over secure connections. If a page is loaded over HTTPS, all other assets (like images and Javascript files) must also be loaded over HTTPS. We are seeing a high volume of reports of these “mixed content” issues, or events in which an insecure, HTTP asset is loaded in the context of a secure, HTTPS page. To do our rollout right, we need to ensure that we have fewer mixed content issues—that we are delivering as much of WIRED.com’s content as securely possible.”

In 2014, the New York Times identified mixed content as a major hurdle to going secure:

"To successfully move to HTTPS, all requests to page assets need to be made over a secure channel. It’s a daunting challenge, and there are a lot of moving parts. We have to consider resources that are currently being loaded from insecure domains — everything from JavaScript to advertisement assets.”

And the W3C recognized this as a huge problem, saying: “Most notably, mixed content checking has the potential to cause real headache for administrators tasked with moving substantial amounts of legacy content onto HTTPS. In particular, going through old content and rewriting resource URLs manually is a huge undertaking.” It cited the BBC’s huge archive as a difficult example.

But it’s not just media sites that have a problem with mixed content. Many CMS users find it difficult or impossible to update all the links that their CMS generates as they may be buried in configuration files, source code or databases. In addition, sites that need to deal with user-generated content also face a problem with http:// URIs.

The Dangers of Mixed Content

Mixed content comes in two flavors: active and passive. Modern web browsers approach the dangers from these different types of mixed content as follows: active mixed content (the most dangerous) is automatically and completely blocked, while passive mixed content is allowed through but results in a warning.

Active content is anything that can modify the DOM (the web page itself). Resources included via the <script>, <link>, <iframe> or <object> tags, CSS values that use url and anything requested using XMLHttpRequest are capable of modifying a page, reading cookies and accessing user credentials.

Passive content is anything else: images, audio, video that are written into the page but that cannot themselves access the page.
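The split between active and passive content can be sketched in a few lines of Python. This is only an illustration: the tag-to-attribute tables are an assumption based on commonly documented browser behavior, the function and class names are hypothetical, and real browsers apply many more rules (CSS url values and script-initiated requests are not covered here).

```python
from html.parser import HTMLParser

# Assumed mapping of tags to the attribute that names their subresource.
ACTIVE_TAGS = {"script": "src", "link": "href", "iframe": "src", "object": "data"}
PASSIVE_TAGS = {"img": "src", "audio": "src", "video": "src"}


class MixedContentFinder(HTMLParser):
    """Collects http:// subresources and labels them active or passive."""

    def __init__(self):
        super().__init__()
        self.findings = []  # list of (kind, tag, url) tuples

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        for kind, table in (("active", ACTIVE_TAGS), ("passive", PASSIVE_TAGS)):
            # Look up the URL-bearing attribute for this tag, if it has one.
            url = attr_map.get(table.get(tag, ""))
            if url and url.lower().startswith("http://"):
                self.findings.append((kind, tag, url))


def find_mixed_content(html):
    """Return every insecure subresource found in an HTML string."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.findings
```

Run against a page served over https://, every "active" finding is something a modern browser would block and every "passive" finding is something that would trigger a warning.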

Active content is a real threat because if an attacker manages to intercept the request for an http:// URI they can replace the content with their own. This is not a theoretical concern. In 2015 GitHub was attacked by a system dubbed the Great Cannon that intercepted requests for common JavaScript files over HTTP and replaced them with a JavaScript attack script. The Great Cannon weaponized innocent users’ machines by intercepting TCP and exploiting the inherent vulnerability in active content loaded from http:// URIs.

Passive content is a different kind of threat: because requests for passive content are sent in the clear, an eavesdropper can monitor the requests and extract information. For example, a well-positioned eavesdropper could monitor cookies, web pages visited and potentially authentication information.

The Firesheep Firefox add-on can be used to monitor a local network (for example, in a coffee shop) for requests sent over HTTP and automatically steal cookies allowing a user to hijack someone’s identity with a single click.

Today, modern browsers block active content that's loaded insecurely, but allow passive content through. Nevertheless, transitioning to all https:// is the only way to address all the security concerns of mixed content.

Fixing Mixed Content Automatically

We’ve wanted to help fix the mixed content problem for a long time, as our goal is that the web become completely encrypted. And, like other CloudFlare services, we wanted to make this a ‘one click’ experience.

CC BY 2.0 image by Anthony Easton

We considered a number of approaches:

Automatically insert the upgrade-insecure-requests CSP directive

The upgrade-insecure-requests directive can be added in a Content Security Policy header like this:

Content-Security-Policy: upgrade-insecure-requests

which instructs the browser to automatically upgrade any HTTP request to HTTPS. This can be useful if the website owner knows that every subresource is available over HTTPS. The website will not have to actually change http:// URIs embedded in the website to https://; the browser will take care of that automatically.

Unfortunately, there is a large downside to upgrade-insecure-requests. Since the browser blindly upgrades every URI to https:// regardless of whether the resulting URI will actually work, pages can be broken.
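For a site owner who does know that every subresource is available over HTTPS, adding the directive is a small change on the server side. The following Python sketch merges it into an existing policy rather than overwriting it. The function name is hypothetical, and it assumes response headers are held in a plain dict keyed by the exact header name.

```python
def with_upgrade_insecure_requests(headers):
    """Return a copy of headers whose CSP includes upgrade-insecure-requests."""
    directive = "upgrade-insecure-requests"
    updated = dict(headers)
    existing = updated.get("Content-Security-Policy", "")
    # CSP directives are separated by semicolons; drop empty fragments.
    directives = [d.strip() for d in existing.split(";") if d.strip()]
    if directive not in (d.lower() for d in directives):
        directives.append(directive)
    updated["Content-Security-Policy"] = "; ".join(directives)
    return updated
```

Calling it twice leaves the policy unchanged, so it can be applied to every response without duplicating the directive.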

Modify all links to use https://

Since not all browsers used by visitors to CloudFlare web sites support upgrade-insecure-requests, we considered upgrading all http:// URIs to https:// as pages pass through our service. Since we are able to parse and modify web pages in real-time, we could have created an ‘upgrade-insecure-requests’ service that did not rely on browser support.

Unfortunately, that still suffers from the same problem of broken links when an http:// URI is transformed to https:// but the resource can’t actually be loaded using HTTPS.

Modify links that point to other CloudFlare sites

Since CloudFlare gives all 4 million of our customers free Universal SSL, and we cover a large percentage of web traffic, we considered upgrading http:// to https:// only for URIs that we know will work because they use our service.

This solves part of the problem but isn’t a good solution for the general problem of upgrading from HTTP to HTTPS.
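The idea of upgrading only hosts that are known to work can be sketched as a simple set lookup. The hostnames below are hypothetical placeholders and the helper name is an assumption; this is not how CloudFlare’s service is implemented. URIs with an explicit port are left alone, since the same port is unlikely to speak HTTPS.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical set of hosts known to serve the same content over HTTPS.
KNOWN_HTTPS_HOSTS = {"static.example.com", "cdn.example.net"}


def upgrade_if_known(url, known_hosts=KNOWN_HTTPS_HOSTS):
    """Switch http:// to https:// only when the host is in known_hosts."""
    parts = urlsplit(url)
    if (
        parts.scheme == "http"
        and parts.port is None
        and parts.hostname in known_hosts
    ):
        return urlunsplit(parts._replace(scheme="https"))
    return url
```

Anything not in the set is returned untouched, which is exactly why this approach covers only part of the problem.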

Create a system that rewrites known HTTPS capable URIs

Finally, we settled upon doing something smart: upgrade a URI from http:// to https:// if we know that the resource can be served using HTTPS. To figure out which links are upgradable we turned to the EFF’s excellent HTTPS Everywhere extension and Google Chrome’s HSTS preload list to augment our knowledge of CloudFlare sites that have SSL enabled.

We are very grateful that the EFF graciously agreed to help us with this project.

The HTTPS Everywhere ruleset goes far beyond just switching http:// to https://: it contains rules (and exclusions) that allow it (and us) to target very specific URIs. For example, here’s an actual HTTPS Everywhere rule for gstatic.com:

It uses a regular expression to identify specific subdomains of gstatic.com that can safely be upgraded to use HTTPS. The complete set of rules can be browsed here.
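To illustrate how a rule with exclusions might be applied to a URI, here is a minimal Python sketch. The rule, its hostnames and its exclusion are hypothetical and are not taken from the HTTPS Everywhere ruleset; the data layout and function name are assumptions made for the example, not the format or parser we use.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Rule:
    pattern: re.Pattern   # matches the http:// prefix that may be upgraded
    replacement: str      # https:// template, may use groups from pattern
    exclusions: list = field(default_factory=list)  # patterns that veto the rule


# Hypothetical rule: upgrade www and numbered img subdomains of example.com,
# except for a legacy path that is assumed not to work over HTTPS.
HYPOTHETICAL_RULES = [
    Rule(
        pattern=re.compile(r"^http://(www|img\d+)\.example\.com/"),
        replacement=r"https://\1.example.com/",
        exclusions=[re.compile(r"^http://img\d+\.example\.com/legacy/")],
    ),
]


def rewrite(url, rules=HYPOTHETICAL_RULES):
    """Return the upgraded URI if a rule applies, else the URI unchanged."""
    for rule in rules:
        if any(ex.search(url) for ex in rule.exclusions):
            continue  # an exclusion matched, so this rule must not fire
        upgraded, count = rule.pattern.subn(rule.replacement, url, count=1)
        if count:
            return upgraded
    return url
```

Because a URI is only rewritten when a rule explicitly matches and no exclusion vetoes it, anything unknown stays on http:// rather than being upgraded blindly.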

Because we need to upgrade a huge number of URIs embedded in web pages (we estimate around 5 million per second) we benchmarked existing HTML parsers (including our own) and decided to write a new one for this type of rewriting task. We’ll write more about its design, testing and performance in a future post.

Automatic HTTPS Rewrites

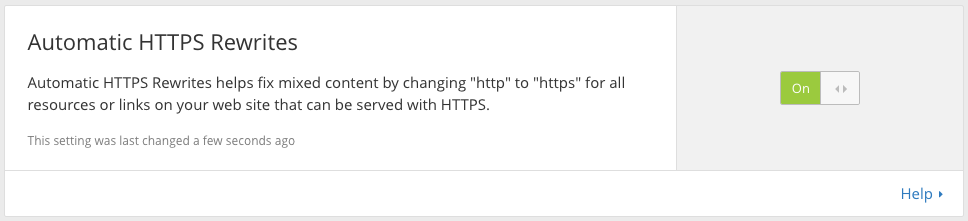

Automatic HTTPS Rewrites are now available in the customer dashboard for all CloudFlare customers. Today, this feature is disabled by default and can be enabled in ‘one click’:

We will be monitoring the performance and effectiveness of this feature and will enable it by default for Free and Pro customers later in the year. We also plan to use the Content Security Policy reporting features to give customers an automatic view of which URIs remain to be upgraded so that their transition to all https:// is made as simple as possible. Sometimes just finding which URIs need to be changed can be very hard, as Wired found out.

We would love to hear how this feature works out for you.